Hosting a competition

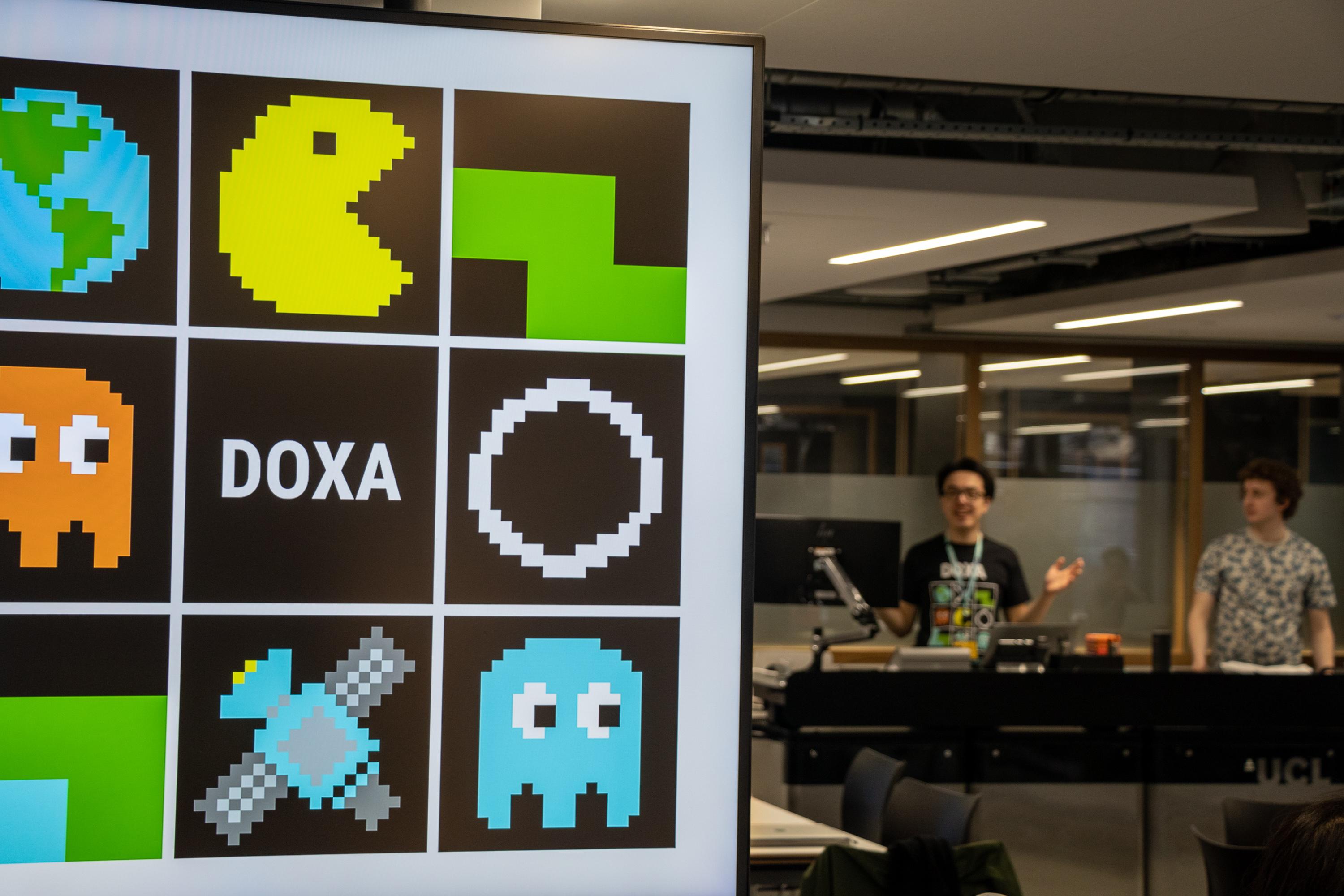

DOXA AI helps enterprises, non-profits and research institutions engage with an international community of talented data scientists and machine learning enthusiasts who are tackling grand challenges in AI and building the future. 🚀

Solve your most pressing AI challenges ⚡

DOXA AI competitions let you crowdsource solutions to your most pressing machine learning R&D challenges, drawing on potentially thousands of community submissions.

Connect with a talented global AI community 🌍

If you're hiring, hosting an open data science challenge on our platform is a unique opportunity to engage with the strongest participants across the platform and identify key talent from a diverse range of backgrounds and industries around the world.

Catalyse research, development & innovation in AI 💡

Running a competition on our platform is a direct way to build community interest and expertise in solving grand challenges with positive real-world impact, while helping to advance the cutting edge of AI and machine learning.

Styles of competition

We support several types of competition on the DOXA AI platform, but in every case we evaluate participants' work directly on the platform and show how they rank on a live, real-time competition scoreboard. 🏆

Get in touch 😎

If you're interested in hosting a challenge on the DOXA AI platform, using our AI infrastructure services or finding out more about our work, definitely reach out to us!
